Evidence-Based Medicine and Clinical Research: Both Are Needed, Neither Is Perfect

Key Messages

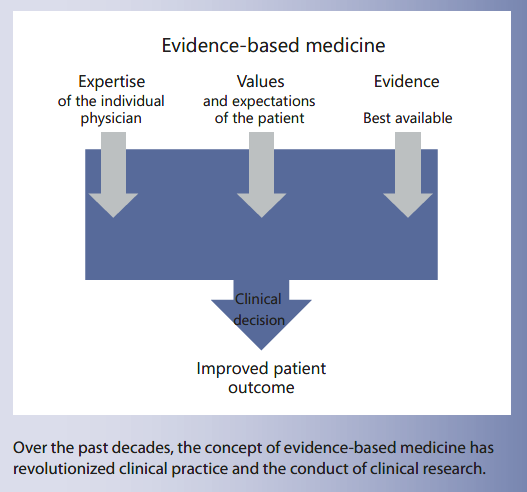

• Over the past 25 years, evidence-based medicine (EBM), defined as individual clinical expertise, best research evidence, and patient values and circumstances, has reshaped medical practice. EBM, however, is not perfect, and the concept is evolving in response to its critics.

• Much of clinical research is poorly designed, conducted, analyzed, and/or reported. Strategies for the development of high-quality research have been developed. If strictly adhered to by all stakeholders, this should lead to more valid and trustworthy findings.

• In the future, considering that new ways of obtaining health data will continue to emerge, the world of EBM and clinical research is likely to change. The ultimate goal, however, will remain the same: improving health outcomes for patients.

Keywords

Science · Research · Methodology · Evaluation · Critical appraisal

Abstract

Currently, it is impossible to think of modern healthcare that ignores evidence-based medicine (EBM), a concept which relies on 3 pillars: individual clinical expertise, the values and desires of the patient, and the best available research. However, EBM is not perfect. Clinical research is also far from being perfect. This article provides an overview of the basic principles, opportunities, and controversies offered by EBM. It also summarizes current discussions on clinical research. Potential solutions to the problems of EBM and clinical research are discussed as well. If there were specific issues related to pediatric nutrition, an attempt was made to discuss the basic principles and limitations in this context. However, the conclusions are applicable to EBM and clinical research in general. In the future, considering that new ways of obtaining health data will continue to emerge, the world of EBM and clinical research is likely to change. The ultimate goal, however, will remain the same: improving health outcomes for patients.

Introduction

Currently, it is impossible to think of modern healthcare that ignores evidence-based medicine (EBM), a concept which relies on 3 pillars: individual clinical expertise, the values and desires of the patient, and the current best evidence (Fig. 1). However, EBM is not perfect. A widely cited expert, John Ioannidis, professor of epidemiology at Stanford University, claims that EBM is “becoming an industry advertisement tool.” Clinical research, one of the EBM pillars, is also far from perfect. Among the prominent figures in medicine who questioned research is Richard Horton, editor of The Lancet, who stated: “The case against science is straightforward: much of the scientific literature, perhaps half, may simply be untrue. Afflicted by studies with small sample sizes, tiny effects, invalid exploratory analyses, and flagrant conflicts of interest, together with an obsession for pursuing fashionable trends of dubious importance, science has taken a turn towards darkness” [1]. Marcia Angell, who served as the editor of The New England Journal of Medicine for over 2 decades, wrote: “It is simply no longer possible to believe much of the clinical research that is published, or to rely on the judgment of trusted physicians or authoritative medical guidelines” [2]. Ioannidis, based on a theoretical model, concluded that “most published research findings are probably false” [3]. Earlier, he also argued that as much as 30% of the most influential original medical research papers later turn out to be mistaken or overstated [4]. The situation in pediatric research is particularly difficult. Interventions tested in adults but used in infants/children may be ineffective, inappropriate, or harmful.
A 2008 review of 6 leading journals (The New England Journal of Medicine, The Journal of the American Medical Association, Pediatrics, Archives of Pediatrics and Adolescent Medicine, Annals of Internal Medicine, and Archives of Internal Medicine) that compared studies in children with those in adults found that studies in children were significantly less likely to be randomized controlled trials (RCTs), systematic reviews, or studies of therapies. If such studies, viewed as sources of the highest evidence, are lacking, this has major implications for medical practice and the quality of care for children [5]. Even if the choice of journals and the time period assessed (3 months only) are unlikely to reflect all of the published literature, the review points to a problem shared by many pediatric studies. This article provides an overview of the basic principles, opportunities, and controversies offered by EBM. It also summarizes current discussions on clinical research, and how to overcome problems with EBM and clinical research. If there were specific issues related to pediatric nutrition, an attempt was made to discuss the basic principles and limitations in this context. However, all conclusions are applicable to EBM and clinical research in general.

Fig. 1. Elements of the evidence-based medicine triad (expertise, values, and evidence) play a similar role in health decision-making.

History of EBM

More than a quarter of a century ago, in 1991, Gordon Guyatt, at that time a young resident at McMaster University, first coined the term “scientific medicine” (which, euphemistically speaking, was not well accepted by his senior colleagues), and then the term “evidence-based medicine” [6]. Soon after, in 1996, his mentors, David Sackett and colleagues, all considered the fathers of EBM, defined it as “the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients” [7] (Fig. 1). The concept that revolutionized clinical practice worldwide was born. It has changed and, in many ways, continues to change the medical world, providing healthcare professionals with a tool to determine which treatments work and which do not. Thus, it is not surprising that, in 2007, when The BMJ chose the top 15 milestones in medicine over the last 160 years, EBM was one of them, next to such achievements as anesthesia, antibiotics, the discovery of DNA structure, sanitation, vaccines, and oral rehydration solution [8].

Steps for Practicing EBM

The following 4 steps are needed for practicing EBM (Table 1): (1) formulation of an answerable question (the problem); (2) finding the best evidence; (3) critical appraisal of the evidence; and (4) applying the evidence to the treatment of patients.

Table 1. Steps for practicing EBM [48]

Hierarchy of Evidence

Although only a general guideline, a hierarchy (levels) of evidence provides useful guidance about which types of evidence, if well done, are more likely to provide trustworthy answers to clinical questions. Which hierarchy is appropriate depends upon the type of clinical question asked (Table 2). An extended hierarchy (levels) of evidence that includes diagnostic, prognostic, and screening studies in addition to therapeutic studies has been published by the Oxford Centre for Evidence-Based Medicine [9]. Of note, irrespective of the type of question, systematic reviews are considered to offer the strongest evidence.

For intervention questions, systematic reviews and meta-analyses are followed by (in descending order of evidence strength) RCTs, cohort studies, case-control studies, case series studies, and lastly, expert opinions or theories, basic research, and animal studies (Fig. 2). Thus, if available, RCTs and meta-analyses should be used in support of clinical decision-making. If the best level of evidence is not available, moving to a lower level of evidence is needed. However, the lower a methodology ranks, the less robust the results and the less likely the study findings are to reflect the true effect; thus, there is less confidence that the intervention will result in the same health outcomes if used in clinical practice. At the base of the hierarchy is animal and basic research (cell/laboratory studies). Discussing methodological problems of animal and cell research is beyond the scope of this article. However, a number of studies have revealed that animal experiments often fail to predict outcomes in humans. Tests on isolated cells can also produce results different from those performed in the body. On the other hand, many remarkable discoveries would not have been possible without vital experiments on animals (e.g., organ transplantation). Moreover, since 1901, nearly every Nobel Laureate in physiology or medicine, if not all, has relied on basic/animal data for their research!

Table 2. Different types of clinical questions require different types of research

Fig. 2. The hierarchy (levels) of evidence (in descending order of evidence strength). RCTs, randomized controlled trials.

Criticism of EBM

From the first publications related to EBM, accusations that EBM is “cookbook medicine” [10], “denigrates clinical expertise” [11], or “ignores patient’s values” [12] have been commonly used by critics. In a 2000 commentary, all of these criticisms (many of which were considered misperceptions of EBM) were addressed by Straus and McAlister [13]. However, the passage of time did not ease the criticism. Moreover, concerns about the current direction of EBM are now being raised by many “EBM insiders.” Recently, Ioannidis challengingly stated that “evidence-based medicine has been hijacked” [14]. He says: “As EBM became more influential, it was also hijacked to serve agendas different from what it originally aimed for.” He also claims that clinical evidence is “becoming an industry advertisement tool.” In brief, one of the concerns is the impact of industry on the way in which research is performed and reported, and consequently, the way in which medicine is practiced, and even the definition of what constitutes health or disease. Overuse and misuse of the term EBM is another concern. As Ioannidis pointed out, there are “eminence-based experts and conflicted stakeholders who want to support their views and their products, without caring much about the integrity, transparency, and unbiasedness of science.”

In 2014, Greenhalgh et al. [15], on behalf of the Evidence-Based Medicine Renaissance Group, provocatively asked in The BMJ: “Evidence based medicine: a movement in crisis?” The group provided a number of arguments to support this view. First, EBM has been misappropriated by vested interests. Indeed, under certain circumstances, industry involvement/funding (i.e., commercial interests) can introduce bias. Second, the unmanageable volume of evidence, especially clinical guidelines, being produced represents a challenge. Third, statistically significant but clinically irrelevant benefits are being exaggerated. Fourth, contrary to the original concept, EBM is currently not patient-centered. Finally, the failure of EBM guidelines to properly address patients with multiple conditions (multi-morbidity) is a problem. The coexistence of 2 or more long-term conditions in 1 patient presents unique challenges. A “one size fits all” approach with evidence targeted to a single condition and treatment option is clearly inappropriate. In the pediatric setting, many diseases that were once deadly are now cured by modern medicine; however, these patients may face multiple, lifelong health problems.

Criticism of RCTs

Among the most heavily criticized aspects of EBM is the use of RCTs and meta-analyses. A randomized controlled trial is defined as an experiment in which 2 or more interventions are compared by being randomly allocated to participants. Large, well-designed, and well-implemented RCTs are considered the gold standard for evaluating the efficacy of healthcare interventions (therapy or prevention). RCTs are the best way to identify causal relationships and can determine efficacy (establish definitively which treatment methods are superior). The fact that they are less likely to be biased by known and unknown confounders is an added strength relative to all other study designs. However, there are some concerns with regard to RCTs. In addition to internal validity, which is the extent to which the design and conduct of a study are likely to have prevented bias or systematic error, the external validity, defined as the extent to which results may be generalized to other circumstances and beyond study populations [16], is one of the major concerns and is considered to be the Achilles’ heel of RCTs. One example is studies carried out in term infants, the results of which may not be extrapolated to very-low-birth-weight infants.

RCTs in Pediatric Nutrition

With the increasing number of RCTs, investigators have recognized limitations of RCTs in the field of pediatric nutrition. First, critically relevant outcomes that relate to nutritional practices in infants/children may take years to become apparent. Therefore, it is difficult to design and implement RCTs that last for a sufficient time to cover more than just a small fraction of the process leading to the final outcome. Second, diet/nutritional and related exposures are complex and interrelated, and in most cases, short-term effects do not persist in the medium to long term. Most studies assess responses to single macro- or micronutrient changes, which may not reflect real-life conditions in which multiple exposures (deficiencies or potential excesses) coexist and interact to define specific outcomes. Third, the aim of a trial is to show (or to fail to demonstrate) efficacy in achieving a desired outcome. Results of such trials can be simply and robustly interpreted in light of the specific intervention and the given study population. However, extrapolation to other populations or to interventions not exactly the same as those tested in the trial may be inappropriate. Table 3 summarizes the main barriers to the conduct of randomized clinical trials.

Still, the results of RCTs may change medical practice. In pediatric nutrition research, one example of a clinical question that has been addressed by an RCT is: Does the fortification of human milk with either human milk-based human milk fortifier or bovine milk-based human milk fortifier (intervention) have an effect on the risk of necrotizing enterocolitis (NEC) (outcome) in extremely premature infants (population)? This RCT, involving 207 infants (birth weight: 500–1,250 g), showed that the groups receiving exclusively human milk had a significantly reduced risk of NEC and NEC needing surgical intervention. Lack of blinding was one of the limitations of the study. Still, the findings strongly support the use of human milk for reducing the risk of NEC in preterm infants [17].

Table 3. Main barriers to the conduct of randomized clinical trials

Criticism of Meta-Analyses

A meta-analysis begins with a systematic review, i.e., a review of a clearly formulated question that uses systematic and explicit methods to identify, select, and critically appraise relevant research, and to collect and analyze data from studies that are included in the review. However, in contrast to a systematic review alone, statistical techniques are then utilized to pool the results of all of the RCTs, so that they can be analyzed together. The primary reasons for performing a meta-analysis are to increase statistical power, thus increasing the chance to reliably detect a clinically important difference, if such a difference exists, and to improve precision in estimating effects, enabling the confidence interval around the effects to be narrowed. When determining whether results of individual trials should be pooled or kept separate, it is important to consider if the studies are sufficiently homogeneous in terms of both the question and the methods. The “PICO” acronym should ideally be the same (or very similar) across studies to be pooled: population, intervention, comparison, and outcome. Thus, it is always appropriate to perform a systematic review, and every meta-analysis should be preceded by a systematic review. However, not every systematic review should be finalized with a meta-analysis; in fact, it is sometimes erroneous and even misleading to perform a meta-analysis.
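The pooling step described above can be illustrated with a minimal sketch. It assumes a fixed-effect, inverse-variance model, which is only one of several pooling approaches and is not necessarily the one used in any meta-analysis cited in this article. The function name `pool_fixed_effect` and the three trial results are hypothetical and serve only to show how combining trials narrows the confidence interval.

```python
import math


def pool_fixed_effect(estimates, standard_errors):
    """Pool per-trial effect estimates with inverse-variance weights.

    Assumption: a fixed-effect model, i.e., all trials estimate the
    same underlying effect (sufficiently homogeneous PICO).
    """
    # Each trial is weighted by the inverse of its variance,
    # so more precise trials contribute more to the pooled estimate.
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    # Approximate 95% confidence interval (normal approximation).
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci


# Hypothetical log risk ratios and standard errors from 3 trials.
log_rr = [-0.40, -0.10, -0.25]
se = [0.30, 0.20, 0.25]

pooled, pooled_se, ci = pool_fixed_effect(log_rr, se)
print(f"pooled log RR = {pooled:.3f}, SE = {pooled_se:.3f}")
print(f"95% CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```

In this made-up example, the pooled standard error is smaller than that of any single trial, which is the gain in precision the text refers to. When trials are heterogeneous, a random-effects model, or no pooling at all, may be more appropriate.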

Since the beginning, meta-analyses have received criticism, being labeled as “mega-silliness” [18], “shmeta-analysis” [19], “statistical alchemy” [20], or “mixing apples and oranges” [21]. Despite these criticisms, the number of meta-analyses (including those in the field of pediatric nutrition) is increasing rapidly, and they are unlikely to disappear. However, hand in hand with the increasing number of meta-analyses is an increase in criticism. For example, concerns have been raised about the exponential increase in the number of meta-analyses. For some topics, there are more systematic reviews than individual trials included in these reviews. Ioannidis recently stated: “The production of systematic reviews has reached epidemic proportions. Possibly, most systematic reviews are unnecessary, misleading and/or conflicting” [22]. Possible flaws in a meta-analysis include failure to identify all relevant studies (that is why searching one database is never enough); risk of bias in the included trials (any meta-analysis is only as good as the constituent studies); exclusion of unpublished data (inclusion of unpublished data reduces the risk of publication bias); opposite conclusions (differences in the review question, search strategy, etc. may be responsible for this); and inconclusiveness (frustrating statements such as “no clear evidence” or “further research is needed”) [23].

Meta-Analyses in Pediatric Nutrition

Is this accusation of overproduction of systematic reviews/meta-analyses true for pediatric nutrition? In just 2 years (2016–2017), at least 14 published meta-analyses aimed to evaluate the effect of probiotics in reducing the risk of NEC, adding to a substantial number of previously published meta-analyses on the same topic [24]. Still, as most existing meta-analyses fail to adequately account for strain-specific effects, it remains unclear which probiotic strain(s) to use. This example shows that overproduction of systematic reviews/meta-analyses may be a problem in pediatric nutrition as well. Considering that there are too many meta-analyses, perhaps it is time to go back to conducting large, multicenter, well-planned, and well-executed trials with well-defined outcomes. This is something to be seriously considered by the co-authors of many published meta-analyses (such as myself!).

Problems with Clinical Research

In the era of EBM, the quality of clinical research, one of the pillars of EBM, is of paramount importance. However, as stated earlier, there are concerns that much of clinical research is poorly designed, conducted, analyzed, and/or reported. Consequently, many of the conclusions drawn from clinical research may be false. Among the first to address this issue was Altman [25]. In a 1994 BMJ Editorial, he stated that “less research, better research, and research done for the right reasons” is needed. More recently, Ioannidis (alone or with colleagues) has published a large number of papers on this subject, including a highly cited publication “Why Most Published Research Findings Are False” [3]. Based on a mathematical model, Ioannidis concluded that more than 50% of published biomedical research findings with a p value of less than 0.05 are likely to be false positive. However, not everyone agrees. For example, Jager and Leek [26] in another paper (again, based on a statistical model) argued that only 14% of p values are likely to be false positive. While the discussion about the extent of bias may continue, it nevertheless indicates that problems exist. Why does the quality of clinical research remain suboptimal? Important factors to consider include the following [3] (see also Table 4).

Table 4. Interpretation of the research findings (when they are less likely to be true) (based on [3])

Sample Size and Underpowered Studies

The smaller the studies, the less likely the research findings are to be true. Low statistical power weakens the purpose of scientific research and reduces the chance of detecting a true effect. It also lowers the likelihood that a statistically significant result reflects a true effect.
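The link between power and the credibility of a significant result can be made concrete with the positive predictive value (PPV) used in the model described in [3]: PPV = (1 − β)R / (R − βR + α), where 1 − β is the power, α is the significance threshold, and R is the pre-study odds that a tested relationship is true. The sketch below is a simplified illustration that ignores bias and multiple competing teams; the function name and the chosen values of R and power are hypothetical.

```python
def positive_predictive_value(power, alpha, prior_odds):
    """Probability that a statistically significant finding is true.

    Simplified form of the model in [3], without the bias term:
    PPV = (1 - beta) * R / (R - beta * R + alpha), with power = 1 - beta.
    """
    true_positives = power * prior_odds   # (1 - beta) * R
    false_positives = alpha               # alpha * 1 (odds of a null relationship)
    return true_positives / (true_positives + false_positives)


# Hypothetical scenario: 1 in 5 tested relationships is true (R = 0.25).
well_powered = positive_predictive_value(power=0.80, alpha=0.05, prior_odds=0.25)
underpowered = positive_predictive_value(power=0.20, alpha=0.05, prior_odds=0.25)

print(f"PPV at 80% power: {well_powered:.2f}")
print(f"PPV at 20% power: {underpowered:.2f}")
```

Under these assumed inputs, lowering the power from 80% to 20% reduces the PPV from 0.80 to 0.50, so a significant result from the underpowered study is no more likely to be true than false.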

Effect Size

When effect sizes are smaller (often the case in underpowered studies), the research findings are less likely to be true.

Unjustified Reliance on the p Value of 0.05

Sole reliance on p values may be problematic. Both false-positive and false-negative results are possible. Of note, in March 2016, the American Statistical Association (ASA) warned against the misuse of p values. In its statement, the ASA advises researchers to avoid drawing scientific conclusions or making policy decisions based on p values alone [27].

Lack of Confirmation (Replication)

The reproducibility of research is a critical component of the scientific process. There are various factors that discourage simple repetition of studies, such as a lack of scientific novelty and/or a lack of interest in interventions that have been proven effective in a single study. Still, as a rule, repeat studies are needed, as a single study is, rightly, never sufficient to change clinical practice. If research findings cannot be replicated, it means they are less likely to be true. Discordance of findings does not indicate which results are correct. Both may be incorrect; however, it indicates that difficulties exist in replicating the original findings. Publishing of both positive and negative results is important.

Pre-Selection

The greater the number of hypotheses tested, the more likely it is to find false-positive results. Similarly, the lesser the selection of tested relationships, the less likely the research findings are to be true.
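The effect of testing many hypotheses can be sketched with the family-wise error rate, 1 − (1 − α)^k, i.e., the chance of at least one false-positive result among k tests. This illustration assumes that the tests are independent and that no true effect exists for any of them; real analyses rarely meet both assumptions, and the function name is hypothetical.

```python
def family_wise_error_rate(n_tests, alpha=0.05):
    """Chance of at least one false positive among n_tests tests.

    Assumptions: tests are independent and every null hypothesis is true.
    """
    return 1.0 - (1.0 - alpha) ** n_tests


for k in (1, 5, 10, 20):
    print(f"{k:>2} tests: {family_wise_error_rate(k):.3f}")
```

Under these assumptions, 10 tests at α = 0.05 carry about a 40% chance of at least one false-positive finding, and 20 tests carry about a 64% chance.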

Flexibility in Analysis

The more flexibility in study designs and in definitions, outcomes, and analyses, the less likely the research findings are to be true. Methodological rigor is likely to reduce the risk of biased findings.

“Hot” Areas of Science

The hotter the research field, the more scientific teams are likely to be involved in a particular field; this greater competition to find impressive results increases the likelihood of false findings.

Financial Interest

The greater the financial (and other) interests and prejudices in a scientific field, the less likely the research findings are to be true. In addition to financial and non-financial conflicts of interest (discussed below), this also includes manipulation of research data and fraud and/or selective outcome reporting.

In Bed with Industry

Ideally, the authors of any type of research should have no conflicts of interest. In the real world, however, this is not always the case. In many settings, research collaboration between academic investigators and industry is even encouraged by universities, public funding bodies, and governmental organizations. For example, Horizon 2020, the biggest EU Research and Innovation program, promotes collaboration with the ultimate goal of making it easier to deliver innovations.

In medicine, conflict of interest generally implies financial ties of the authors with industry. However, conflict of interest is a much broader matter. Examples of nonfinancial conflicts of interest include strongly held personal beliefs related to the research topic, personal relationships, institutional relationships, or a desire for career development [28]. Other players might also not be free of conflict of interest, either financial or nonfinancial. For example, lobbyists, patients’ associations, researchers, lawyers, medical writers, advertising and social networking specialists, physicians, administrators, and policy makers may also be industry supported [29]. Taken together, while eliminating any conflict of interest of all parties involved might be an unrealistic goal, moving toward greater transparency and disclosure of potential or actual conflicts of interest should be a priority. Compared with non-industry-sponsored studies, industry-sponsored studies tended to have more favorable effectiveness and harm findings and more favorable conclusions [30].

In pediatric nutrition, funding of research by manufacturers of infant formulae may be considered even more controversial because of the need for protection and promotion of breastfeeding. However, in the case of studies involving infant formulae, industry involvement is unavoidable, as investigators lack the means to manufacture quality infant products. Still, independently funded research to obtain reliable evidence on the effects, safety, and benefits of drugs and other commercial products and services is a desirable goal.

What Can Be Done to Solve Problems with EBM?

As a response to “systematic bias, wastage, error and fraud relevant to patient care,” a group of prominent individuals published the “EBM Manifesto for Better Health” (Table 5) [31]. Thus, the problems were not only identified, but an agenda for fixing EBM was proposed. The list of proposed solutions may not be complete. Thus, as part of the “EBM Manifesto,” anyone can contribute with suggestions through an online form. Overall, the solutions proposed by the authors of the “EBM Manifesto” are similar to those proposed by others [16]. EBM should have the care of an individual patient as the top priority. In line with the original understanding of EBM as the integration of the best research evidence with clinical expertise and patient values, individualized application of evidence to patients, with less reliance on following guidelines, is emphasized. Research should be clinically relevant, better reported, and applied. Critical appraisal skills training, while important, should not be done in isolation; shared decision-making skills are needed. The language, format, and presentation of evidence summaries and clinical guidelines should serve those who use the evidence-based information in real practice. Publishers and journal editors have the responsibility for ensuring that published materials can be used by healthcare professionals.

Table 5. EBM Manifesto for better health [31]

What Can Be Done to Improve Clinical Research?

Standards for Conducting Research

In 2014, The Lancet published a series of 5 articles called “Research: Increasing Value, Reducing Waste,” in which they discussed: (1) setting research priorities so that funding is based on issues relevant to users of research [32]; (2) research design, conduct, and analysis (key problems include poor protocols and designs, poor utility of information, statistical power and outcome misconception, and insufficient consideration of other evidence) [33]; (3) biomedical research regulation and management [34]; (4) the role of fully accessible research information [35]; and (5) incomplete or unusable research [36].

Standards for Conducting Pediatric Research

Independently, significant developments have taken place in recent years to strengthen pediatric clinical research. In 2009, the Standards for Research (StaR) in Child Health initiative was founded [37]. This initiative addressed the current paucity of and deficiencies in pediatric clinical trials. The aim of StaR is to improve the quality of the design, conduct, and reporting of pediatric clinical research by promoting the use of modern research standards, so that the evidence is methodologically robust and important.

Standards for Reporting Clinical Research

To avoid wasting time and resources, research needs to be not only well performed, but also adequately reported. There is evidence that incomplete and/or poor and/or inaccurate reporting of clinical research is a problem, thus, reducing its role in clinical decision-making. Frequent shortcomings in reporting include non-reporting or delayed reporting of whole studies; selective reporting of only some outcomes in relation to study findings; the omission of crucial information in the description of research methods and interventions; omissions or misinterpretation of results in the main article and abstract; inadequate or distorted reporting of harms; and confusing or misleading presentations of results, data, and graphs [38]. Being aware of the reporting problems, journal editors, together with other stakeholders such as methodologists, researchers, clinicians, and members of professional organizations, have developed a number of standards for reporting clinical research to ensure that all details of design, conduct, and analysis are included in the manuscript. Among others, these standards (all available at the EQUATOR Network website: www.equator-network.org) include the following: CONSORT (Consolidated Standards of Reporting Trials) [39]; PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) [40]; STARD (Standards for the Reporting of Diagnostic Accuracy Studies) [41]; and MOOSE (Meta-Analysis of Observational Studies in Epidemiology) [42]. Endorsement of these reporting guidelines by journals is highly variable. However, increasingly, the editors now require that authors follow these standards when submitting a manuscript for publication.

Initiatives to Change How Scientists Are Evaluated

The current science evaluation system relies heavily on bibliometrics; thus, it promotes quantity over quality of research. However, there are a number of initiatives focusing on how to improve research quality by changing how scientists are evaluated and rewarded. One example is “The Leiden Manifesto” published in 2015 by a group of experts on altmetrics who identified 10 principles to guide better research evaluation [43] (Table 6). If followed, these criteria are likely to improve the integrity of research. At least some scientific institutions are already developing policies to encourage quality over quantity [44].

Table 6. The Leiden Manifesto for research metrics: 10 principles to guide research evaluation [43]

The Changing Face of Clinical Trials

Clinical research is evolving. In 2016, The New England Journal of Medicine published a collection of articles called “The Changing Face of Clinical Trials,” written by trialists for trialists, that examines the current challenges in the design, performance, and interpretation of clinical trials. Among others, this series covers new trial designs. Recognition of the limitations of current research methods leads towards newer research designs. Examples include pragmatic trials (the major strength is that participants are broadly representative of people who will receive a treatment or diagnostic strategy, and there is potential for high generalizability) or adaptive trial designs (used by the investigators to alter basic features of an ongoing trial).

“Big Data” and “Real-World Data”

In the future, decision-making may be influenced by “big data” and “real-world data.” “Big data” (often characterized by 3 Vs: volume, velocity, and variety) is a term that describes the collection of extremely large sets of information, which require specialized computational tools to enable their analysis and exploitation [45]. A subset of “big data” is “real-world evidence” (also known as “real-world data”), defined as data derived from sources such as electronic health records, registries, hospital records, and health insurance data [46] (Fig. 3). “Real-world” data allow one to see whether a treatment works in “real” patients outside of clinical study settings [47]. However, as attractive as “big data” and “real-world data” may be, their real value and whether the evidence is unbiased remain unclear. As with a meta-analytical approach, these data will be only as good as they are accurate.

Fig. 3. Sources of “real-world data” (evidence).

Concluding Remarks

All health decisions should be based on high-quality scientific data. Thus, both EBM and clinical research are needed. However, neither EBM nor clinical research is perfect. Clinical research varies significantly in quality and, therefore, in the trustworthiness of the yielded evidence. A better understanding of the strengths and limitations of EBM and clinical research is vital. Solutions to fix EBM have been proposed. Similarly, strategies for the development of high-quality research have been developed. If strictly adhered to by researchers, academia, funding bodies, industry, journals, and publishers, this should lead to more valid and trustworthy findings. In the future, considering that new ways of obtaining health data will continue to emerge, the world of EBM and clinical research is likely to change. The ultimate goal, however, will remain the same: improving health outcomes for patients.